About Me

Zehan Wang (王泽寒) is a PhD student in the College of Computer Science at Zhejiang University, supervised by Prof. Zhou Zhao. I am also fortunate to collaborate closely with Prof. Hengshuang Zhao from The University of Hong Kong.

My current research interests broadly span Spatial Intelligence, from 3D/4D Perception & Understanding to Generative World Models. I have published 10+ first-author papers at top international AI conferences such as NeurIPS, ICLR, ICML, and CVPR.

I won the Chu Kochen Presidential Scholarship (the highest honor at Zhejiang University). My research is supported by the Fundamental Research Project for Young Ph.D. Students from NSFC (国家自然科学基金博士青年基金) and the CIE-Tencent Doctoral Research Incentive Project (中国电子学会——腾讯博士生科研激励计划).

🔥 News

- 2025.10: 3 papers are accepted by NeurIPS 2025! (Orient Anything v2, GenSpace)

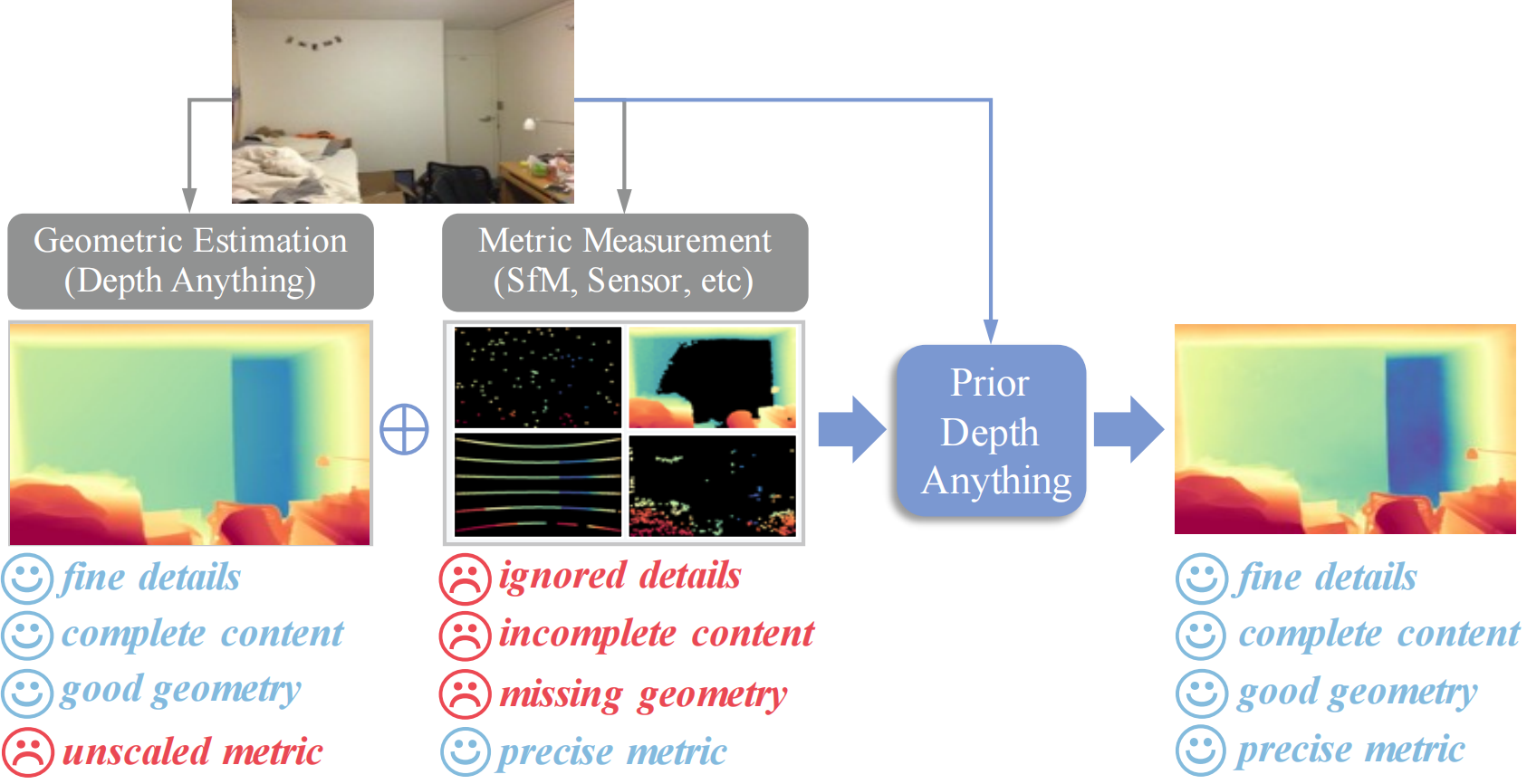

- 2025.05: We released Prior Depth Anything, which estimates fine-grained, complete metric depth from coarse, incomplete depth measurements.

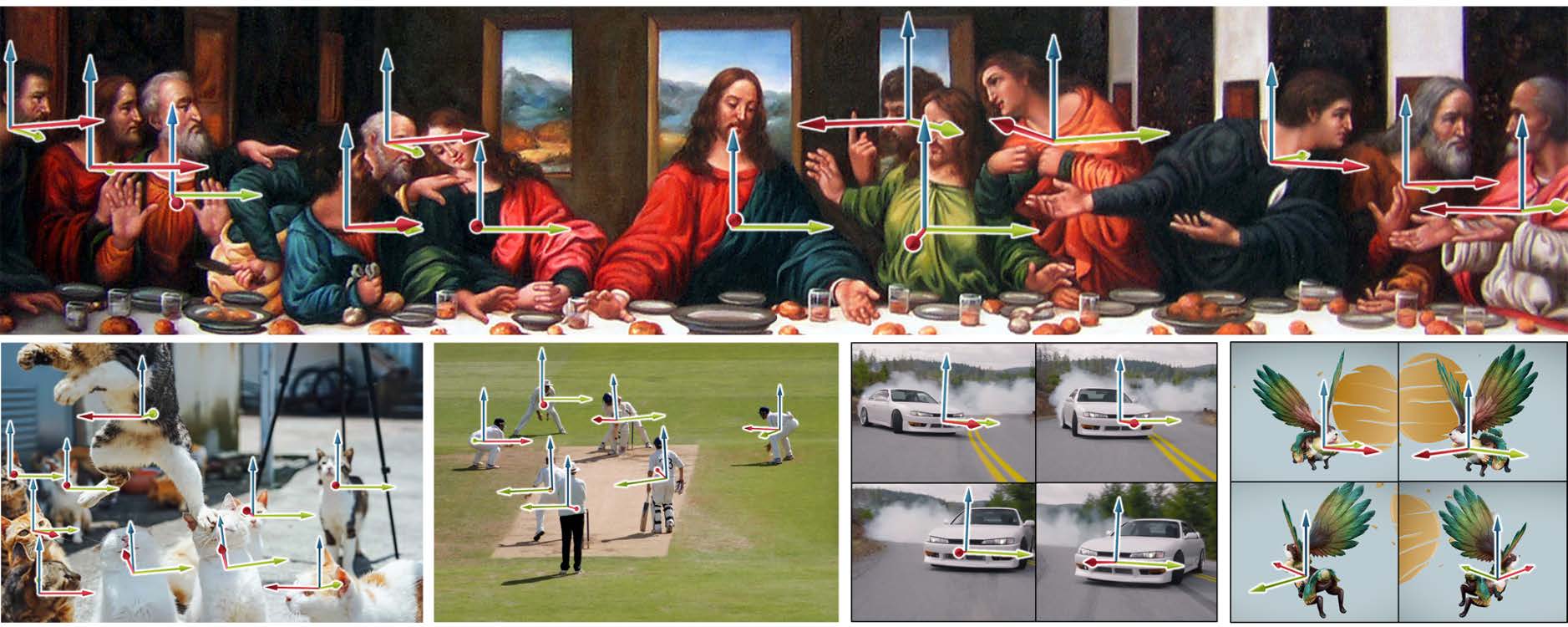

- 2025.05: 1 paper accepted by ICML 2025! (Orient Anything)

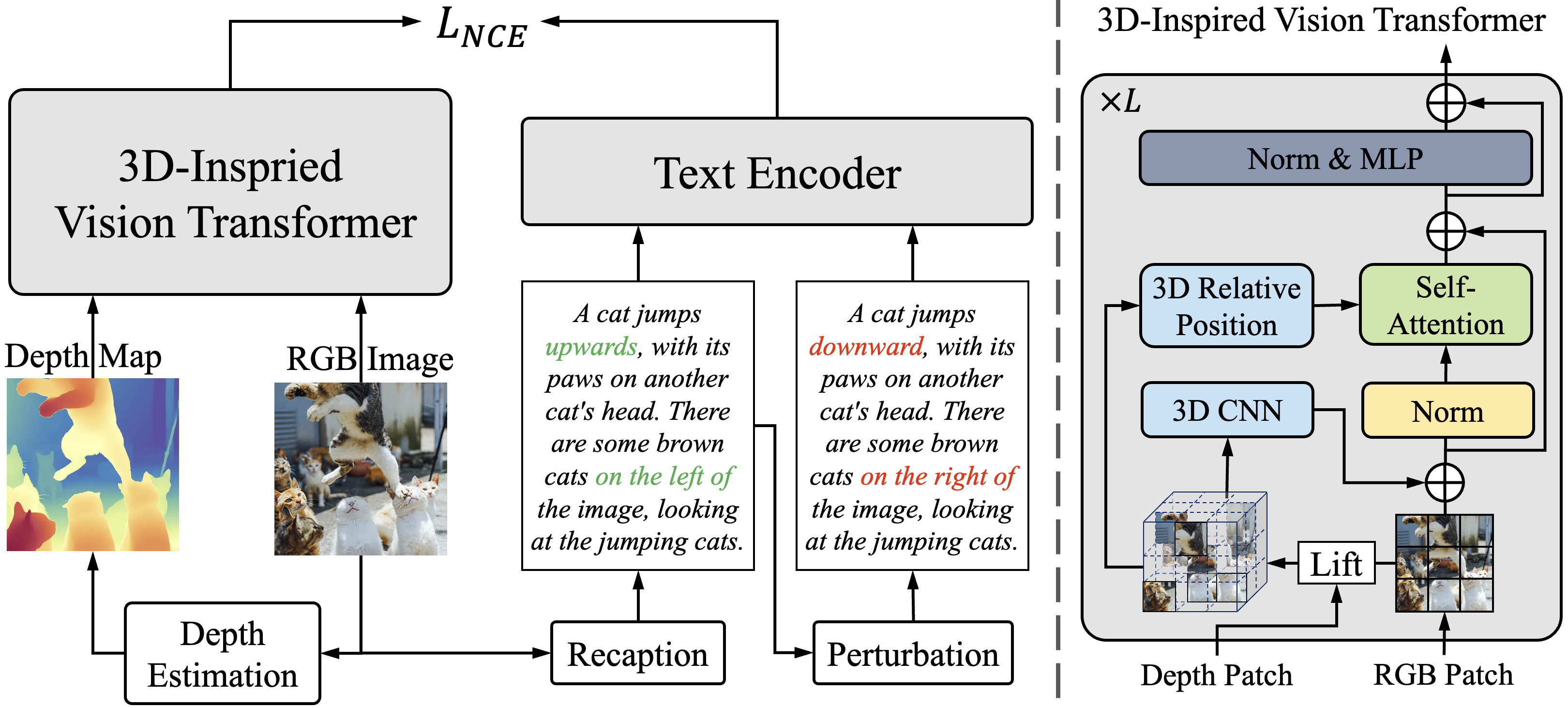

- 2025.02: 2 papers accepted by CVPR 2025! (SpatialCLIP)

- 2025.01: 6 papers are accepted by ICLR 2025! (OmniBind)

- 2024.12: We released Orient Anything, a foundation model for estimating object orientation in images.

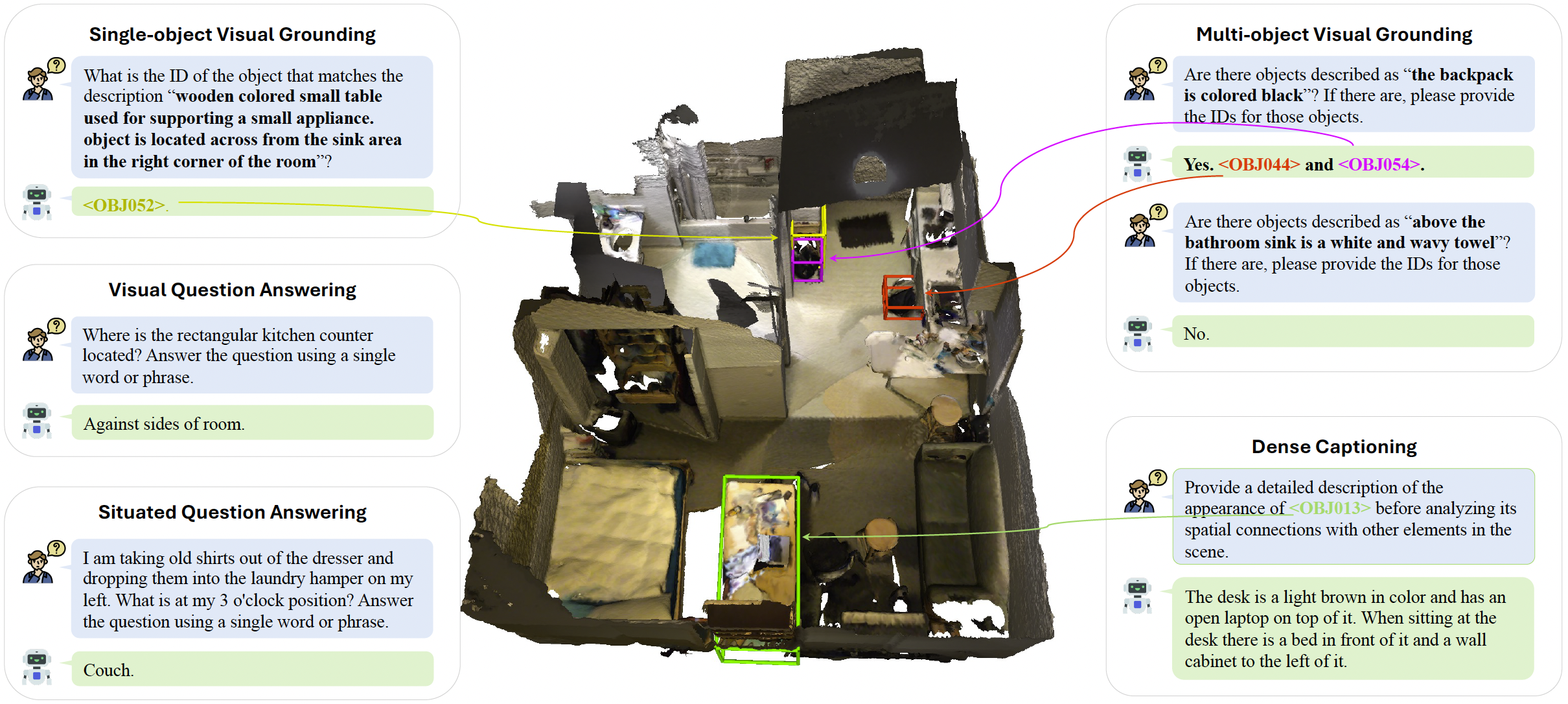

- 2024.10: 6 papers are accepted by NeurIPS 2024! (Chat-Scene and Ex-MCR)

- 2024.05: 2 papers are accepted by ICML 2024! (FreeBind)

- 2023.09: 1 paper is accepted by NeurIPS 2023! (C-MCR)

- 2023.06: 2 papers are accepted by ICCV 2023! (WS-3DVG)

📝 Representative Publications

3D/4D Perception & Understanding

- 3D/4D Perception Foundation Models: Orient Anything v2 (NeurIPS 2025), Orient Anything (ICML 2025), Prior Depth Anything

- 3D/4D Understanding MLLMs: SpatialCLIP (CVPR 2025), Chat-Scene (NeurIPS 2024), Chat-3D (NAACL 2025)

- Unified Multimodal Representations: C-MCR (NeurIPS 2023), Ex-MCR (NeurIPS 2024), FreeBind (ICML 2024), OmniBind (ICLR 2025)

Generative World Model

- World Models: Post-training for World Models (in progress)

- 3D-aware Visual Generation: SpatialHand, GenSpace (NeurIPS 2025)

I am currently most interested in the synergy between perception and generation for spatial intelligence. Specifically, I am exploring (1) using 3D/4D perception foundation models as reward models to enhance content generation, and (2) using generative models to produce imaginative content that aids 3D/4D perception.

- Depth Anything with Any Prior. Zehan Wang, Siyu Chen, Lihe Yang, Jialei Wang, Ziang Zhang, Hengshuang Zhao, Zhou Zhao. arXiv 2025

- A state-of-the-art zero-shot depth estimation model that can integrate any form of depth measurement as a prior.

- Orient Anything: Learning Robust Object Orientation Estimation from Rendering 3D Models. Zehan Wang, Ziang Zhang, Tianyu Pang, Chao Du, Hengshuang Zhao, Zhou Zhao. ICML 2025

- The first zero-shot image-based object orientation estimation model.

- SpatialCLIP: Learning 3D-aware Image Representations from Spatially Discriminative Language. Zehan Wang, Sashuai Zhou, Shaoxuan He, Haifeng Huang, Lihe Yang, Ziang Zhang, Xize Cheng, Shengpeng Ji, Tao Jin, Hengshuang Zhao, Zhou Zhao. CVPR 2025

- Improving the spatial intelligence of MLLMs by enhancing CLIP visual representations.

- Chat-3D: Data-efficiently tuning large language model for universal dialogue of 3D scenes. Zehan Wang*, Haifeng Huang*, Yang Zhao, Ziang Zhang, Zhou Zhao. NAACL 2025

- Chat-Scene: Bridging 3D scene and large language models with object identifiers. Haifeng Huang*, Yilun Chen*, Zehan Wang*, Rongjie Huang, Runsen Xu, Tai Wang, Luping Liu, Xize Cheng, Yang Zhao, Jiangmiao Pang, Zhou Zhao. NeurIPS 2024

- A series of state-of-the-art 3D MLLMs for scene understanding.

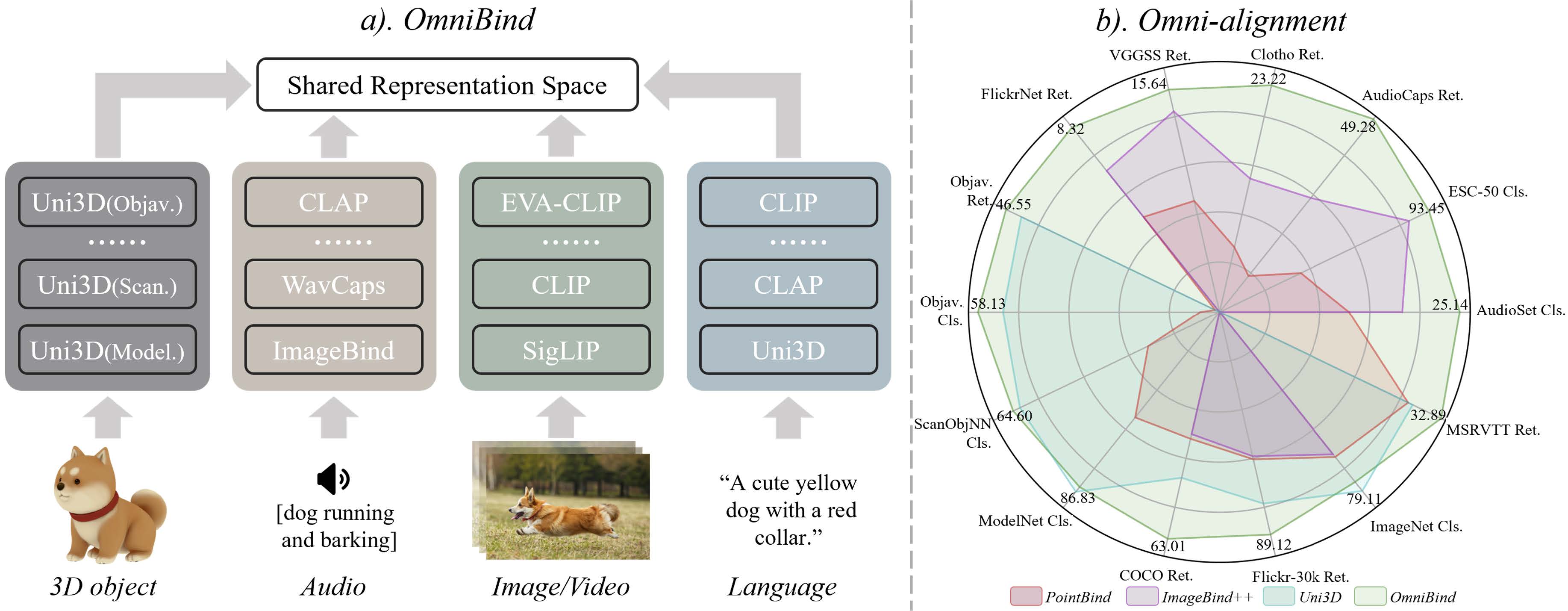

- OmniBind: Large-scale omni multimodal representation via binding spaces. Zehan Wang, Ziang Zhang, Hang Zhang, Luping Liu, Rongjie Huang, Xize Cheng, Hengshuang Zhao, Zhou Zhao. ICLR 2025

- Large-scale 3D-audio-image-language representation models (7B–30B parameters) achieving SoTA performance on 13 benchmarks.

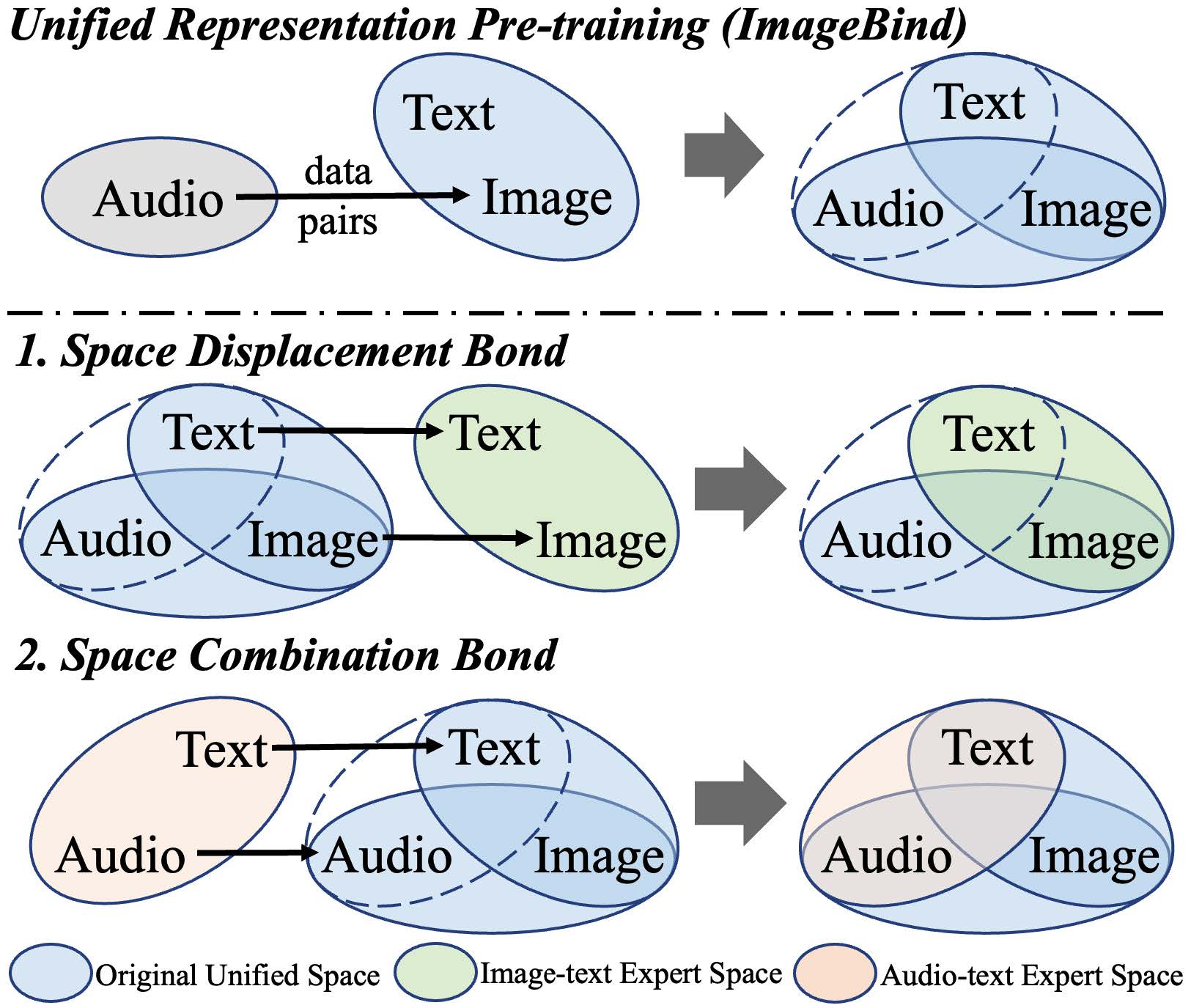

- FreeBind: Free Lunch in Unified Multimodal Space via Knowledge Fusion. Zehan Wang, Ziang Zhang, Xize Cheng, Rongjie Huang, Luping Liu, Zhenhui Ye, Haifeng Huang, Yang Zhao, Tao Jin, Peng Gao, Zhou Zhao. ICML 2024

- Enhancing multimodal representations (e.g., ImageBind, InternVL) by freely fusing them in a unified space.

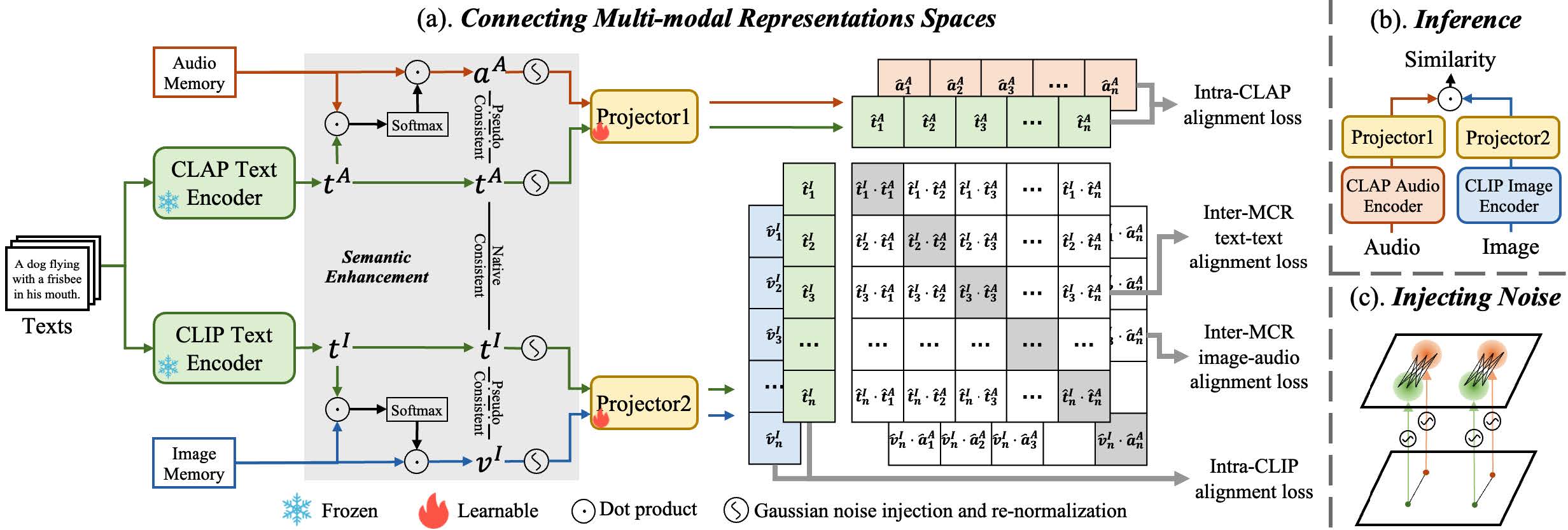

- Connecting Multi-modal Contrastive Representations. Zehan Wang, Yang Zhao, Xize Cheng, Haifeng Huang, Jiageng Liu, Li Tang, Linjun Li, Yongqi Wang, Aoxiong Yin, Ziang Zhang, Zhou Zhao. NeurIPS 2023

- Learning multimodal contrastive representations without requiring paired data.

Full Publication List

2025

- [ICML 2025] Orient Anything: Learning Robust Object Orientation Estimation from Rendering 3D Models. Zehan Wang, Ziang Zhang, Tianyu Pang, Chao Du, Hengshuang Zhao, Zhou Zhao.

- [arXiv 2025] OmniChat: Enhancing Spoken Dialogue Systems with Scalable Synthetic Data for Diverse Scenarios. Zehan Wang, Haifeng Huang, Yang Zhao, Ziang Zhang, Zhou Zhao.

- [CVPR 2025] SpatialCLIP: Learning 3D-aware Image Representations from Spatially Discriminative Language. Zehan Wang, Sashuai Zhou, Shaoxuan He, Haifeng Huang, Lihe Yang, Ziang Zhang, Xize Cheng, Shengpeng Ji, Tao Jin, Hengshuang Zhao, Zhou Zhao.

- [CVPR 2025] RoboGround: Robot Manipulation with Grounded Vision-Language Priors. Haifeng Huang, Xinyi Chen, Yilun Chen, Hao Li, Xiaoshen Han, Zehan Wang, Tai Wang, Jiangmiao Pang, Zhou Zhao.

- [NAACL 2025] Chat-3D: Data-efficiently tuning large language model for universal dialogue of 3D scenes. Zehan Wang*, Haifeng Huang*, Yang Zhao, Ziang Zhang, Zhou Zhao.

- [WWW 2025] EAGER-LLM: Enhancing Large Language Models as Recommenders through Exogenous Behavior-Semantic Integration. Minjie Hong, Yan Xia, Zehan Wang, Jieming Zhu, Ye Wang, Sihang Cai, Xiaoda Yang, Quanyu Dai, Zhenhua Dong, Zhimeng Zhang, Zhou Zhao.

- [ICLR 2025] OmniBind: Large-scale omni multimodal representation via binding spaces. Zehan Wang, Ziang Zhang, Hang Zhang, Luping Liu, Rongjie Huang, Xize Cheng, Hengshuang Zhao, Zhou Zhao.

- [ICLR 2025] Improving Long-Text Alignment for Text-to-Image Diffusion Models. Luping Liu, Chao Du, Tianyu Pang, Zehan Wang, Chongxuan Li, Dong Xu.

- [ICLR 2025] OmniSep: Unified Omni-Modality Sound Separation with Query-Mixup. Xize Cheng, Siqi Zheng, Zehan Wang, Minghui Fang, Ziang Zhang, Rongjie Huang, Ziyang Ma, Shengpeng Ji, Jialong Zuo, Tao Jin, Zhou Zhao.

- [ICLR 2025] VoxDialogue: Can Spoken Dialogue Systems Understand Information Beyond Words? Xize Cheng, Ruofan Hu, Xiaoda Yang, Jingyu Lu, Dongjie Fu, Zehan Wang, Shengpeng Ji, Rongjie Huang, Boyang Zhang, Tao Jin, Zhou Zhao.

- [ICLR 2025] WavTokenizer: An efficient acoustic discrete codec tokenizer for audio language modeling. Shengpeng Ji, Ziyue Jiang, Wen Wang, Yifu Chen, Minghui Fang, Jialong Zuo, Qian Yang, Xize Cheng, Zehan Wang, Ruiqi Li, Ziang Zhang, Xiaoda Yang, Rongjie Huang, Yidi Jiang, Qian Chen, Siqi Zheng, Zhou Zhao.

- [ICLR 2025] Diff-Prompt: Diffusion-driven prompt generator with mask supervision. Weicai Yan, Wang Lin, Zirun Guo, Ye Wang, Fangming Feng, Xiaoda Yang, Zehan Wang, Tao Jin.

2024

- [NeurIPS 2024] Chat-Scene: Bridging 3D scene and large language models with object identifiers. Haifeng Huang*, Yilun Chen*, Zehan Wang*, Rongjie Huang, Runsen Xu, Tai Wang, Luping Liu, Xize Cheng, Yang Zhao, Jiangmiao Pang, Zhou Zhao.

- [NeurIPS 2024] Extending multi-modal contrastive representations. Ziang Zhang*, Zehan Wang*, Luping Liu, Rongjie Huang, Xize Cheng, Zhenhui Ye, Wang Lin, Huadai Liu, Haifeng Huang, Yang Zhao, Tao Jin, Siqi Zheng, Zhou Zhao.

- [NeurIPS 2024] MimicTalk: Mimicking a personalized and expressive 3D talking face in minutes. Zhenhui Ye, Tianyun Zhong, Yi Ren, Ziyue Jiang, Jiawei Huang, Rongjie Huang, Jinglin Liu, Jinzheng He, Chen Zhang, Zehan Wang, Xize Chen, Xiang Yin, Zhou Zhao.

- [NeurIPS 2024] Lumina-Next: Making Lumina-T2X Stronger and Faster with Next-DiT. Le Zhuo, Ruoyi Du, Han Xiao, Yangguang Li, Dongyang Liu, Rongjie Huang, Wenze Liu, Lirui Zhao, Fu-Yun Wang, Zhanyu Ma, Xu Luo, Zehan Wang, Kaipeng Zhang, Xiangyang Zhu, Si Liu, Xiangyu Yue, Dingning Liu, Wanli Ouyang, Ziwei Liu, Yu Qiao, Hongsheng Li, Peng Gao.

- [NeurIPS 2024] Frieren: Efficient Video-to-Audio Generation with Rectified Flow Matching. Yongqi Wang, Wenxiang Guo, Rongjie Huang, Jiawei Huang, Zehan Wang, Fuming You, Ruiqi Li, Zhou Zhao.

- [NeurIPS 2024] Action Imitation in Common Action Space for Customized Action Image Synthesis. Wang Lin, Jingyuan Chen, Jiaxin Shi, Zirun Guo, Yichen Zhu, Zehan Wang, Tao Jin, Zhou Zhao, Fei Wu, YAN Shuicheng, Hanwang Zhang.

- [ICML 2024] FreeBind: Free Lunch in Unified Multimodal Space via Knowledge Fusion. Zehan Wang, Ziang Zhang, Xize Cheng, Rongjie Huang, Luping Liu, Zhenhui Ye, Haifeng Huang, Yang Zhao, Tao Jin, Peng Gao, Zhou Zhao.

- [ICML 2024] InstructSpeech: Following Speech Editing Instructions via Large Language Models. Rongjie Huang, Ruofan Hu, Yongqi Wang, Zehan Wang, Xize Cheng, Ziyue Jiang, Zhenhui Ye, Dongchao Yang, Luping Liu, Peng Gao, Zhou Zhao.

- [ACL 2024] Make-a-voice: Revisiting voice large language models as scalable multilingual and multitask learners. Rongjie Huang, Chunlei Zhang, Yongqi Wang, Dongchao Yang, Jinchuan Tian, Zhenhui Ye, Luping Liu, Zehan Wang, Ziyue Jiang, Xuankai Chang, Jiatong Shi, Chao Weng, Zhou Zhao, Dong Yu.

- [ACL 2024] TransFace: Unit-Based Audio-Visual Speech Synthesizer for Talking Head Translation. Xize Cheng, Rongjie Huang, Linjun Li, Tao Jin, Zehan Wang, Aoxiong Yin, Minglei Li, Xinyu Duan, Zhou Zhao.

- [ACM MM 2024] VoiceTuner: Self-Supervised Pre-training and Efficient Fine-tuning For Voice Generation. Rongjie Huang, Yongqi Wang, Ruofan Hu, Xiaoshan Xu, Zhiqing Hong, Dongchao Yang, Xize Cheng, Zehan Wang, Ziyue Jiang, Zhenhui Ye, Luping Liu, Siqi Zheng, Zhou Zhao.

2023

- [NeurIPS 2023] Connecting Multi-modal Contrastive Representations. Zehan Wang, Yang Zhao, Xize Cheng, Haifeng Huang, Jiageng Liu, Li Tang, Linjun Li, Yongqi Wang, Aoxiong Yin, Ziang Zhang, Zhou Zhao.

- [ICCV 2023] Distilling Coarse-to-Fine Semantic Matching Knowledge for Weakly Supervised 3D Visual Grounding. Zehan Wang, Haifeng Huang, Yang Zhao, Linjun Li, Xize Cheng, Yichen Zhu, Aoxiong Yin, Zhou Zhao.

- [ICCV 2023] MixSpeech: Cross-Modality Self-Learning with Audio-Visual Stream Mixup for Visual Speech Translation and Recognition. Xize Cheng, Tao Jin, Rongjie Huang, Linjun Li, Wang Lin, Zehan Wang, Ye Wang, Huadai Liu, Aoxiong Yin, Zhou Zhao.

- [EMNLP 2023] 3DRP-Net: 3D Relative Position-aware Network for 3D Visual Grounding. Zehan Wang, Haifeng Huang, Yang Zhao, Linjun Li, Xize Cheng, Yichen Zhu, Aoxiong Yin, Zhou Zhao.

- [ACL 2023] Scene-robust natural language video localization via learning domain-invariant representations. Zehan Wang, Yang Zhao, Haifeng Huang, Yan Xia, Zhou Zhao.

📖 Educations

- 2022.09 - Present, Ph.D. Student, Zhejiang University, Hangzhou.

- 2018.09 - 2022.06, Undergraduate, Zhejiang University, Hangzhou.

🎖 Honors and Awards

- 2025: Chu Kochen Presidential Scholarship (the highest honor at Zhejiang University)

- 2025: National Scholarship

- 2025: CIE-Tencent Doctoral Research Incentive Project (中国电子学会——腾讯博士生科研激励计划)

- 2025: Fundamental Research Project for Young Ph.D. students from NSFC (国家自然科学基金博士青年基金)

- 2022: Excellent Graduate, Zhejiang Province

- 2020: National Scholarship

- 2019: National Scholarship